Just like ftp.m.o, shift-reloaded, stage.mozilla.org is getting an overhaul as well. If you read through preed’s post that I just linked, you’ll see the plans for stage included in there as well. For various reasons (most of them involving LDAP and testing issues) the two changes ended up not happening at the same time, and we did only the ftp/archive part during that last outage window. We’re finally ready to proceed with the stage.m.o half of this, and the best part is that for this chunk of the puzzle, we don’t even need an outage window. We’ll be running the new stage alongside the old one for a little while to let people try it out, make sure their upload scripts still work, and bring issues to our attention before the old one goes away.

The items listed in preed’s earlier post that still have to happen are:

- All files will be virus scanned before becoming available. We currently virus scan all builds, but depending on a number of factors, it was possible for unscanned builds to appear on the FTP site for a window of time; we’ve removed this window.

- Interactive shell accounts on the FTP farm will be replaced with sftp-only accounts.

Non-interactive Accounts For Uploaders

One of the changes we’re making is that shell accounts are going away. The new server will have you chrooted into the staging area, and you will be limited to scp, sftp, rsync, and a small subset of file management commands (such as mv, cp, chmod, chown, chgrp, etc.) invoked via ssh. This is why, if you have any scripted uploads, you need to test them on the new server to make sure they still work, and modify them if they don’t. If you are doing something on stage currently that really needs full shell access, we’ll probably be happy to accommodate you, just on some other machine. Come talk to us or file a bug.
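To make the “limited to a few commands via ssh” idea concrete, here is a minimal sketch of an allowlist check for the command line an ssh client asks to run. It is hypothetical: the post doesn’t say how stage-new enforces the restriction, and the `parse_allowed` name and `ALLOWED_PROGRAMS` set are assumptions built from the programs listed above.

```python
import shlex
from typing import List, Optional

# Hypothetical allowlist based on the programs named in the post. sftp is not
# listed because OpenSSH normally runs it as a subsystem, not a command line.
ALLOWED_PROGRAMS = {"scp", "rsync", "mv", "cp", "chmod", "chown", "chgrp"}


def parse_allowed(command_line: str) -> Optional[List[str]]:
    """Split an ssh command line into argv, or return None if it is not allowed.

    The argv is meant to be exec'd directly, never handed to a shell, so
    characters such as ';' or '|' stay plain arguments instead of chaining
    a second command.
    """
    try:
        argv = shlex.split(command_line)
    except ValueError:
        return None  # e.g. unbalanced quotes: reject rather than guess
    if not argv:
        return None  # an empty command means "give me a shell"
    if argv[0] not in ALLOWED_PROGRAMS:
        return None  # also rejects explicit paths like /bin/sh or ./rsync
    return argv
```

One common place for a check like this is behind an OpenSSH forced command, which receives the client’s request in the `SSH_ORIGINAL_COMMAND` environment variable and could then call `os.execvp(argv[0], argv)` on success.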

We are also moving from local accounts on the staging server to LDAP-based accounts, to make management of permissions easier. In a few cases, this might mean your username will change (in most cases, the affected people have been contacted already). Accounts that haven’t been used in the last year will not be ported over. If you haven’t connected to stage in the last year, you’ll need to file a bug in the FTP: Staging component to get your access back. Accounts will be getting enabled over the course of the next 24 hours. If you want to get in sooner than that, come find me on IRC and I’ll manually toggle your account. The new machine is located at stage-new.mozilla.org.
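The rule for which accounts carry over amounts to a simple filter on last use. This is a hypothetical sketch: the `Account` record, the `accounts_to_port` name, and treating “the last year” as 365 days are assumptions; the real migration presumably worked from LDAP and login records the post doesn’t describe.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Account:
    username: str
    last_login: Optional[datetime]  # None: never connected


def accounts_to_port(accounts: List[Account], now: datetime,
                     max_idle: timedelta = timedelta(days=365)) -> List[str]:
    """Usernames used within max_idle of `now` (365 days stands in for "the last year")."""
    cutoff = now - max_idle
    return [a.username for a in accounts
            if a.last_login is not None and a.last_login >= cutoff]
```

Anyone filtered out here corresponds to the people who need to file a bug to get their access back.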

Virus Scanning

The ftp file tree was almost a terabyte in size before. Now, combined with archive.m.o, it’s 2.2 terabytes. Keeping even one copy of that around is fairly expensive; keeping multiple live copies is simply cost-prohibitive. On the current staging system, for lack of disk space, there’s no way to prevent files from going to the mirrors before they get virus scanned. The virus scanner comes along afterward, scans the newly uploaded files, and yanks back out any in which it finds a virus. So there is a small window of time when something with a virus in it could make it to the mirrors, only to disappear from them again the next time they sync.

With the new staging system, we’re making use of a filesystem technology that is new to Linux (BSD has apparently had it for years) called unionfs, which allows us to stack filesystems in layers. If it helps to visualize it, think of it like a multi-layer image in Photoshop, where each layer is transparent by default, and each pixel in the image is a file on the filesystem. When we make changes to a file, we’re only really changing the pixel in that position in the topmost layer (that pixel is then no longer transparent). From here on out, this post gets a little technical, to explain how it’s set up for those who are curious. If you’re not the technical type, feel free to stop reading here. Nothing beyond this point will affect your ability to upload if you have an account.
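The “transparent layer” idea boils down to a lookup rule: walk the layers from the top down and use the first one that has an entry for the path. Here is a toy Python model of that rule, assuming each layer is just a dictionary from path to file contents; the names `lookup` and `merged_view` are hypothetical, and real unionfs does this in the kernel, with directories, permissions, and much more.

```python
from typing import Dict, List, Optional

# Each layer maps path -> file contents. A path absent from a layer is
# "transparent" there, like an untouched pixel in a Photoshop layer.
Layer = Dict[str, bytes]


def lookup(layers: List[Layer], path: str) -> Optional[bytes]:
    """Return the file as seen through the stack; layers are ordered top first."""
    for layer in layers:
        if path in layer:
            return layer[path]  # first opaque "pixel" wins
    return None  # transparent all the way down: the file does not exist


def merged_view(layers: List[Layer]) -> Dict[str, bytes]:
    """Flatten the stack into the single view a user would see."""
    view: Dict[str, bytes] = {}
    for layer in reversed(layers):  # paint bottom-up so upper layers overwrite
        view.update(layer)
    return view
```

Because changes only ever land in an upper layer, the bottom dictionary keeps its original contents, which is the property that lets the mirrors keep syncing an untouched tree.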

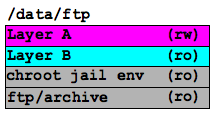

The main ftp tree (which is NFS-mounted from a huge disk array) gets mounted read-only as the “base layer” of a unionfs mount. We then put another layer on top of that which contains the chroot jail environment (the executables and libraries that jailed users need for minimal functionality while connected, but that don’t need to be visible to the mirrors). On top of that is another read-only layer (Layer B on the left), which is used for the virus scanning (more on that in a moment). The top layer (Layer A in the diagram) is the one that end users actually write to when they make changes to files. This last layer records all of the additions, deletions, and modifications users make, so that those files appear changed in the unified filesystem view the users see, while the underlying filesystem that the mirrors rsync from isn’t touched.
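Here is a toy model of that four-layer stack, showing how writes and deletions land only in the top writable layer. It is a sketch under stated assumptions: the `Layer` and `UnionView` classes are hypothetical, and deletions are recorded in a per-layer “whiteout” set, loosely modelled on the whiteout entries union filesystems use to hide a copy that lives in a lower, read-only layer.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Layer:
    name: str
    writable: bool = False
    files: Dict[str, bytes] = field(default_factory=dict)
    whiteouts: Set[str] = field(default_factory=set)  # paths deleted at this layer


class UnionView:
    """Toy union mount (hypothetical); layers are listed top first."""

    def __init__(self, layers: List[Layer]):
        self.layers = layers

    def _top_writable(self) -> Layer:
        for layer in self.layers:
            if layer.writable:
                return layer
        raise PermissionError("no writable layer in the stack")

    def read(self, path: str) -> Optional[bytes]:
        for layer in self.layers:
            if path in layer.whiteouts:
                return None  # deleted here, so lower copies stay hidden
            if path in layer.files:
                return layer.files[path]
        return None

    def write(self, path: str, data: bytes) -> None:
        top = self._top_writable()
        top.whiteouts.discard(path)
        top.files[path] = data  # lower layers are never modified

    def delete(self, path: str) -> None:
        top = self._top_writable()
        top.files.pop(path, None)
        top.whiteouts.add(path)  # mask any copy in the read-only layers below
```

The stack is passed top first, matching the diagram: Layer A, Layer B, the chroot jail, then the NFS-mounted base. Only Layer A changes when a user uploads or deletes something.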

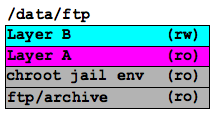

The layers can be reordered and switched between read-write and read-only at any time, so when it comes time to push the files to the mirrors, we move Layer B to the top, make it read-write, and change Layer A to read-only. This way, nobody can make changes to Layer A while we’re scanning it. We then virus-scan Layer A directly. Once the scan completes and any infected files have been removed, we move the changes from this layer down to the live ftp/archive layer at the bottom, which the mirrors see when they rsync. Layer A is then cleared off so it’s ready to be swapped with Layer B again on the next pass.
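One scan-and-publish pass can be sketched as a single function. This is illustrative only: `Layer`, `publish_pass`, and the `is_infected` callback are hypothetical names, and the callback stands in for whatever virus scanner is actually used, which the post doesn’t name.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Layer:
    name: str
    writable: bool = False
    files: Dict[str, bytes] = field(default_factory=dict)
    whiteouts: Set[str] = field(default_factory=set)  # paths deleted in this layer


def publish_pass(stack: List[Layer], base: Layer,
                 is_infected: Callable[[bytes], bool]) -> List[str]:
    """Run one pass; `stack` is [upload layer, spare layer], top first.

    Mutates `stack` in place and returns the paths removed as infected.
    """
    uploads, spare = stack
    # 1. Swap: the spare layer goes on top and takes new writes, while the
    #    upload layer becomes read-only so nothing changes during the scan.
    spare.writable = True
    uploads.writable = False
    stack[0], stack[1] = spare, uploads
    # 2. Scan the frozen layer directly and drop anything infected.
    infected = [path for path, data in uploads.files.items() if is_infected(data)]
    for path in infected:
        del uploads.files[path]
    # 3. Apply the remaining deletions and additions/modifications to the
    #    live tree at the bottom, which is what the mirrors rsync from.
    for path in uploads.whiteouts:
        base.files.pop(path, None)
    base.files.update(uploads.files)
    # 4. Clear the frozen layer so it can be the spare on the next pass.
    uploads.files.clear()
    uploads.whiteouts.clear()
    return infected
```

After the call, the old upload layer sits second in the stack, empty, and becomes the spare for the next pass, so the two layers simply alternate roles.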
